Photo by Markus Winkler

For half of my career I worked either in mature businesses or in well-funded, late-stage startups with teams big enough to write comprehensive tests. It turned out there is another side to the story: working for an early-stage startup.

At different stages, a company needs developers (and other people) with different sets of skills. Hiring a senior engineer who would spend several months architecting the perfect scalable platform, and then another 6 months rewriting everything from scratch in a different language, is not the best fit for a 1-10 person company that wants to move really quickly to find product-market fit. At that stage you want to be hyper-agile and ready to adapt or pivot almost instantly. You barely have time to implement a feature or two before realizing that some of your earlier decisions were wrong and that work has to be thrown away. So how do you deliver quality software and keep your existing customers happy? With testing, of course. Meet the checklist.

Checklists have been around for a long time. Pilots run a pre-flight check before every take-off. Atul Gawande, author of the well-known book The Checklist Manifesto, conducted research in hospitals to see whether using a checklist before surgery improved outcomes, and the results were indeed positive: the number of complications went down. As a software engineer, you might ask: why would I need a QA checklist if I can write unit tests? Fair question. Let me explain how testing evolved at Shipit.

Like any startup, we launched without any automated tests: no unit tests, no integration tests, nothing. Just a quick manual run in the browser before deploying to production. Sometimes there was no testing at all, because why would you need to test such a simple feature? Well, it turned out that tiny changes can have a butterfly effect, and a seemingly small feature can break a larger flow.

We then started writing unit tests, hoping they would solve the problem. But unit tests are meant for testing individual units of your code, e.g. a single function, and the number of tests needed to cover every part a user touches in their day-to-day workflow can be huge. Over my career I have also written tests that contained bugs themselves and reported the code under test as working when in fact it was not. Unit tests also take a lot of time to write, which is very expensive for a company that runs weekly sprints and can change direction almost instantly. So we moved to other types of tests.

Next we tested small user flows: a user can log in and is redirected to the roadmap page, the password recovery form is displayed and contains an email field, an item can be added to the roadmap. These mini flows were easy to test because they are exposed via our internal API. This helped us:

- figure out which essential flows users go through daily

- quickly catch butterfly-effect changes, where a change in one part of the code breaks another
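As an illustration only, here is a minimal Python sketch of what such mini-flow tests could look like. The article does not describe Shipit’s internal API, so `FakeInternalApi`, its methods, the `/roadmap` path and the test user are all hypothetical; in a real suite each flow would call the running service instead of an in-memory stand-in.

```python
"""Sketch of API-level mini-flow tests.

FakeInternalApi is a hypothetical in-memory stand-in for an app's internal API;
a real suite would call the running service instead.
"""


class FakeInternalApi:
    """Hypothetical API exposing the three mini flows from the article."""

    def __init__(self) -> None:
        self._passwords = {"ann@example.com": "s3cret"}  # made-up test user
        self._roadmap: list[str] = []

    def login(self, email: str, password: str) -> dict:
        # Tell the client where to redirect after the login attempt.
        if self._passwords.get(email) == password:
            return {"ok": True, "redirect": "/roadmap"}
        return {"ok": False, "redirect": "/login"}

    def password_recovery_form(self) -> dict:
        # Describe the fields the password recovery form renders.
        return {"fields": ["email"]}

    def add_roadmap_item(self, title: str) -> dict:
        self._roadmap.append(title)
        return {"ok": True, "items": list(self._roadmap)}


def flow_login_redirects_to_roadmap(api: FakeInternalApi) -> None:
    response = api.login("ann@example.com", "s3cret")
    assert response["ok"] and response["redirect"] == "/roadmap"


def flow_password_recovery_has_email_field(api: FakeInternalApi) -> None:
    assert "email" in api.password_recovery_form()["fields"]


def flow_add_roadmap_item(api: FakeInternalApi) -> None:
    response = api.add_roadmap_item("Dark mode")
    assert response["ok"] and "Dark mode" in response["items"]


MINI_FLOWS = [
    flow_login_redirects_to_roadmap,
    flow_password_recovery_has_email_field,
    flow_add_roadmap_item,
]


def run_mini_flows() -> dict[str, bool]:
    """Run each flow against a fresh API instance and record pass/fail per flow."""
    results: dict[str, bool] = {}
    for flow in MINI_FLOWS:
        try:
            flow(FakeInternalApi())
            results[flow.__name__] = True
        except AssertionError:
            results[flow.__name__] = False
    return results


if __name__ == "__main__":
    for name, passed in run_mini_flows().items():
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

Each flow gets a fresh API instance so that one flow’s side effects (such as an added roadmap item) cannot mask a failure in another.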

We covered most user journeys with such tests. However, a user doesn’t just log in and reset their password: combined flows grow into larger stories with many connected points. This is where we decided to compile a quality assurance checklist. Such a list must meet the following requirements:

- ideally, the tests must cover all of the app’s functionality (in our case they do)

- each test must cover as many of the requirements as possible

- the number of tests must be minimized

Let’s illustrate this with an example: a new user must be able to sign up with a Google account. This test covers a lot of functionality behind the scenes:

- creation of a new user account

- creation of a new team

- creation of a new roadmap

- adding onboarding items, ideas, and goals

- kicking off the onboarding process within the app

- starting an email sequence that explains essential concepts of the app
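Taken together, “cover everything” and “keep the number of tests small” describe a set cover problem. One common way to approximate it is the greedy heuristic sketched below in Python: keep picking the scenario that covers the most still-uncovered requirements. This is a technique swapped in for illustration, not necessarily how our list was built; apart from the Google sign-up coverage, which mirrors the list above, the scenario names and requirement labels are hypothetical.

```python
"""Greedy set cover: pick a short list of checklist scenarios that still covers
every requirement. Scenario names and requirement labels are hypothetical,
except the Google sign-up coverage, which mirrors the list in the article."""


def pick_scenarios(requirements: set[str], scenarios: dict[str, set[str]]) -> list[str]:
    """Repeatedly choose the scenario covering the most still-uncovered requirements.

    The greedy result is not guaranteed to be the smallest possible list,
    but it is simple and usually close.
    """
    uncovered = set(requirements)
    chosen: list[str] = []
    while uncovered:
        # On ties, max() keeps the first scenario in insertion order.
        best = max(scenarios, key=lambda name: len(scenarios[name] & uncovered))
        gained = scenarios[best] & uncovered
        if not gained:
            raise ValueError(f"no scenario covers: {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= gained
    return chosen


SCENARIOS = {
    "sign up with Google": {
        "create user account",
        "create team",
        "create roadmap",
        "add onboarding items, ideas and goals",
        "start in-app onboarding",
        "start onboarding email sequence",
    },
    # Hypothetical narrower scenarios that overlap with the one above.
    "create team from settings": {"create team"},
    "add roadmap item": {"add roadmap item"},
    "log out": {"log out"},
    "add roadmap item, then log out": {"add roadmap item", "log out"},
}

# Every requirement touched by at least one scenario.
REQUIREMENTS = set().union(*SCENARIOS.values())

if __name__ == "__main__":
    print(pick_scenarios(REQUIREMENTS, SCENARIOS))
    # → ['sign up with Google', 'add roadmap item, then log out']
```

The broad sign-up scenario is chosen first because it alone covers six requirements, and the narrow single-purpose scenarios never make the list: that is the “each test covers as much as possible, as few tests as possible” trade-off in miniature.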

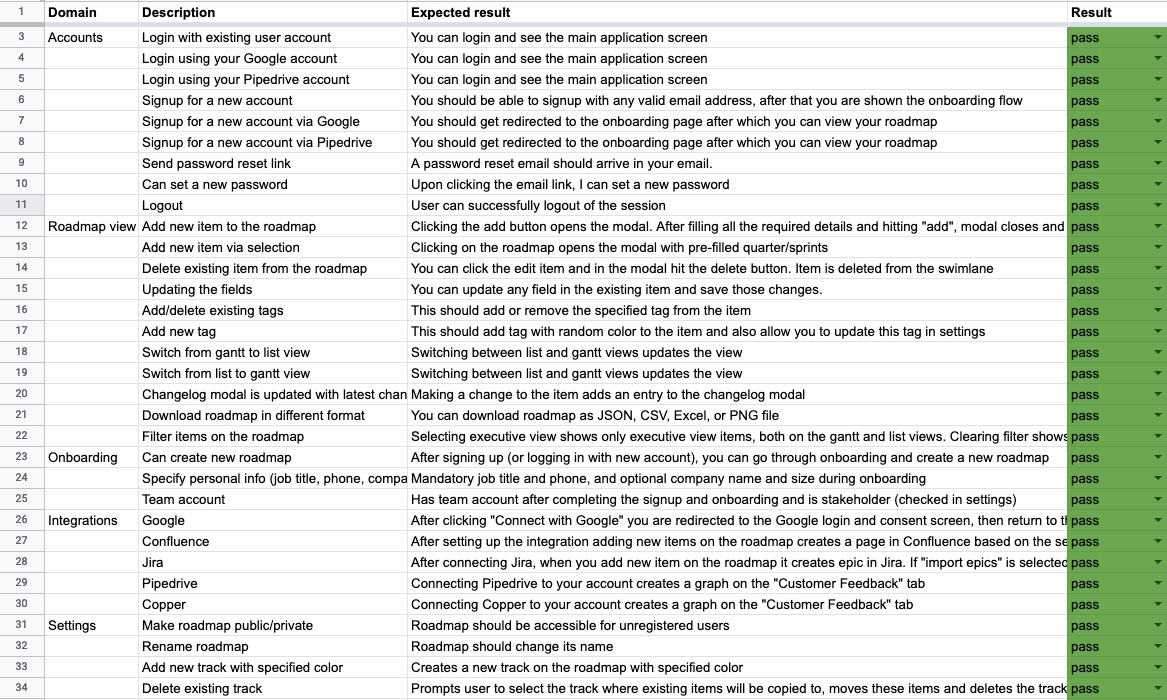

As the software grows more complex, these tests help ensure that users can still use the app. Our list has four columns:

- Module: a larger part of the application, for example the roadmap, the settings screen, or the prioritisation page

- Description: a simple test action, e.g. log out

- Expected result: what should happen after running the action

- Result: a simple color-coded drop-down with 3 values (untested, pass, fail)

For every release candidate, we start with all tests in the untested state (yellow). As we progress, the result column gradually turns green, sometimes with a few red cells. No matter how small the code changes are, each build goes through the checklist, and we do not deploy it until everything is green.
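The four columns and the “all green before deploy” rule fit in a few lines of code. Below is a minimal Python sketch, assuming one record per checklist row; the three result values and their colours come from the description above, while the class and function names and the sample rows are hypothetical.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Result(Enum):
    UNTESTED = "untested"  # yellow cell
    PASS = "pass"          # green cell
    FAIL = "fail"          # red cell


@dataclass
class ChecklistItem:
    module: str           # e.g. roadmap, settings screen
    description: str      # the test action
    expected_result: str  # what should happen after the action
    result: Result = Result.UNTESTED


def start_release_candidate(template: list[ChecklistItem]) -> list[ChecklistItem]:
    """Copy the template so every row of a new release candidate starts untested."""
    return [replace(item, result=Result.UNTESTED) for item in template]


def blocking_items(checklist: list[ChecklistItem]) -> list[ChecklistItem]:
    """Rows that still keep the build from shipping (untested or failed)."""
    return [item for item in checklist if item.result is not Result.PASS]


def can_deploy(checklist: list[ChecklistItem]) -> bool:
    """Deploy only when the checklist is non-empty and every row has passed."""
    return bool(checklist) and not blocking_items(checklist)


# Hypothetical rows for illustration.
TEMPLATE = [
    ChecklistItem("Settings", "Log out", "User is taken to the login page"),
    ChecklistItem("Roadmap", "Add an item", "The item appears on the roadmap"),
]

if __name__ == "__main__":
    rc = start_release_candidate(TEMPLATE)
    print(can_deploy(rc))  # False: everything is still untested
    rc[0].result = Result.PASS
    rc[1].result = Result.FAIL
    print(can_deploy(rc))  # False: one red cell
    rc[1].result = Result.PASS
    print(can_deploy(rc))  # True: all green
```

Treating an empty checklist as not deployable is a choice made in this sketch: `all()` over an empty list would otherwise return `True`, letting a build through without any checks.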

It is crazy how many times we made a small change, thinking it wouldn’t affect anything, only to find out that one of the checklist tests failed. We also try to develop locally in one browser (e.g. Mozilla Firefox) and test in others (Safari and Chrome), which helps make sure the code works across a wide range of browsers.

If you would like some inspiration, here is the checklist we use almost daily.